Seminar Series on Meaningful Human-AI Interactions for a Digital Society – #3: Defining “Meaningful” Human-AI Interactions for a Digital Society

September 3, 2024 - 12:00 - 13:30

A Seminar Series on Meaningful Human-AI Interactions for a Digital Society

Interested in how to design AI to be meaningful, transparent, and ethical? Then join us for the continuation of our Meaningful Human-AI Interaction event series! Building on our previous events, we will explore with a group of interdisciplinary experts how to define meaningful human-AI interactions and how to deploy AI responsibly.

Event #3: Promoting Meaningful Human-AI Interactions: Societal and Legislative Perspectives

Explore how to define and promote meaningful human-AI interactions with Marc Steen (TNO). Marc will present an “Extended Error Matrix” that places AI in its societal context and can help us define what makes an interaction meaningful and promote interdisciplinary collaboration towards responsible AI. The session will be moderated by Stefan Buijsman (TU Delft) and Birna van Riemsdijk (University of Twente).

Speaker

Marc Steen, Senior Research Scientist at TNO: Responsible Innovation

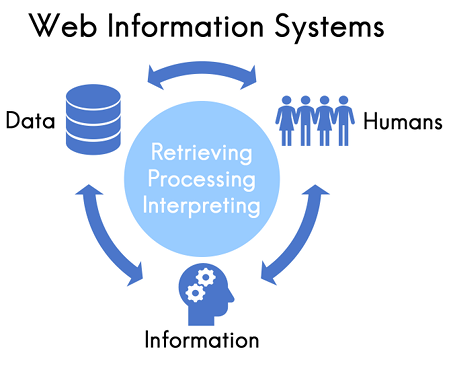

Talk: We need better images of AI and better conversations about AI

This presentation critiques the ways in which the people involved in developing and applying AI systems (and, indeed, journalists and the general public) often visualize and talk about these systems. They often depict AI systems as shiny humanoid robots or as free-floating electronic brains. Such images convey misleading messages: that AI works independently of people and can reason in ways superior to people. Instead, we propose to visualize AI systems as parts of larger sociotechnical systems; here, we can learn from cybernetics, for example. Similarly, we propose that the people involved in designing and deploying an algorithm need to extend their conversations beyond the four boxes of the Error Matrix, for example, to critically discuss false positives and false negatives. We present two thought experiments, each with one practical example. We propose to understand, visualize, and talk about AI systems in relation to a larger, complex reality. We also propose to enable people from diverse disciplines to collaborate around boundary objects, such as a drawing of an AI system in its sociotechnical context or an ‘extended’ Error Matrix. Such interventions can promote meaningful human control, transparency, and fairness in the design and deployment of AI systems.